Olá,

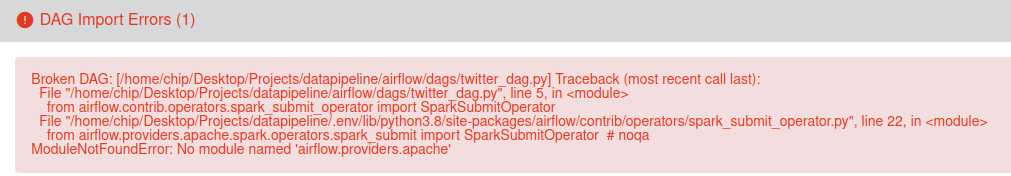

não estou conseguindo importar o dag, ele dá o seguinte erro:

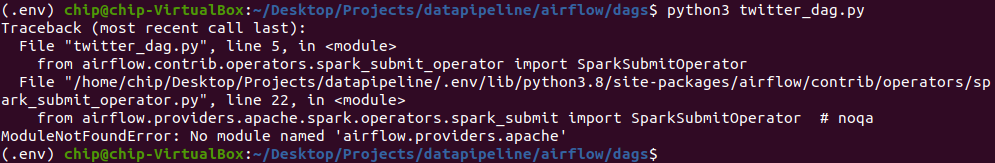

O mesmo erro aparece quando tento executar o dag diretamente no terminal:

O mesmo erro aparece quando tento executar o dag diretamente no terminal:

Segue codigo do dag:

from airflow.models import DAG

from operators.twitter_operator import TwitterOperator

from datetime import datetime, date

from os.path import join

from airflow.contrib.operators.spark_submit_operator import SparkSubmitOperator

with DAG(dag_id="twitter_dag", start_date = datetime.now()) as dag:

twitter_operator = TwitterOperator(

task_id = "twitter_aluraonline",

query="AluraOnline",

file_path=join(

"/home/chip/Desktop/Projects/datapipeline/datalake",

"twitter_aluraonline",

f"extract_date={date.today()}",

f"AluraOnline_{date.today()}.json"

)

)

twitter_transform = SparkSubmitOperator(

task_id='transform_twitter_aluraonline',

application='/home/chip/Desktop/Projects/datapipeline/spark/transformation.py',

name='twitter_transformation',

application_args=(

'--src',

'/home/chip/Desktop/Projects/datapipeline/datalake'

'/bronze/twitter_aluraonline/extract_date=2022-03-12',

'--dest',

'/home/chip/Desktop/Projects/datapipeline/datalake'

'/silver/twitter_aluraonline',

'--process-date',

date.today()

)

)Aproveitando a dúvida, também não consigo selecionar o connection type "spark" na configuracao da conexao, aparentemente está faltando um "Airflow provider package"