I had the same problem as you.

I'm not sure this is the best solution, but it worked for me.

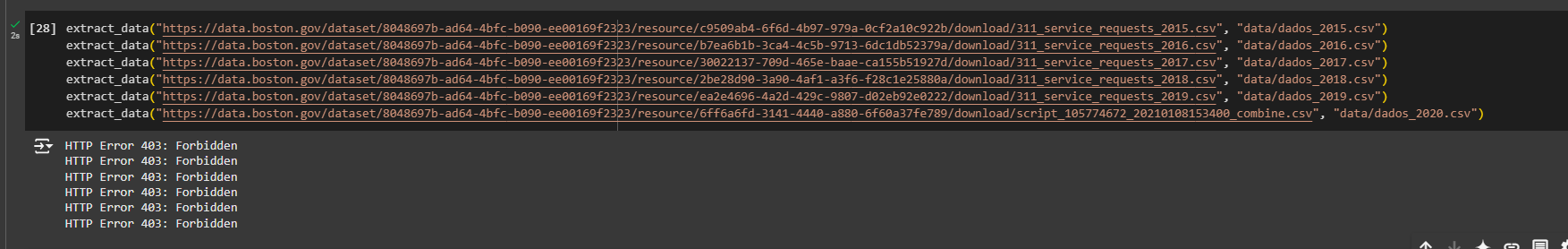

First, I noticed that the URLs on the site had changed (maybe the data was republished), but updating them alone still didn't fix it.

Then I replaced them with the most recent URL for each year and, with ChatGPT's help, ended up with the code below, which ran without problems:

```python
import os

import requests


def extract_data(url, local_file):
    """Download the file at `url` and save it to `local_file`."""
    # Send a browser-like User-Agent; some servers reject requests without one
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }

    try:
        # timeout avoids hanging forever if the server stops responding
        response = requests.get(url, headers=headers, timeout=60)
        response.raise_for_status()  # Raise HTTPError for 4xx/5xx responses

        # Ensure the local directory exists (skip if the path has no directory part)
        directory = os.path.dirname(local_file)
        if directory:
            os.makedirs(directory, exist_ok=True)

        # Save the raw bytes to the specified local file
        with open(local_file, "wb") as file:
            file.write(response.content)

        print(f"Data saved to {local_file}")

    except requests.exceptions.HTTPError as e:
        print(f"HTTP Error: {e}")
    except Exception as e:
        print(f"Error: {e}")


# Updated download URLs for each year (2015 to 2020), paired with the local output path
urls = [
    ("https://data.boston.gov/dataset/8048697b-ad64-4bfc-b090-ee00169f2323/resource/c9509ab4-6f6d-4b97-979a-0cf2a10c922b/download/tmphrybkxuh.csv", "data/dados_2015.csv"),
    ("https://data.boston.gov/dataset/8048697b-ad64-4bfc-b090-ee00169f2323/resource/b7ea6b1b-3ca4-4c5b-9713-6dc1db52379a/download/tmpzxzxeqfb.csv", "data/dados_2016.csv"),
    ("https://data.boston.gov/dataset/8048697b-ad64-4bfc-b090-ee00169f2323/resource/30022137-709d-465e-baae-ca155b51927d/download/tmpzccn8u4q.csv", "data/dados_2017.csv"),
    ("https://data.boston.gov/dataset/8048697b-ad64-4bfc-b090-ee00169f2323/resource/2be28d90-3a90-4af1-a3f6-f28c1e25880a/download/tmp7602cia8.csv", "data/dados_2018.csv"),
    ("https://data.boston.gov/dataset/8048697b-ad64-4bfc-b090-ee00169f2323/resource/ea2e4696-4a2d-429c-9807-d02eb92e0222/download/tmpcje3ep_w.csv", "data/dados_2019.csv"),
    ("https://data.boston.gov/dataset/8048697b-ad64-4bfc-b090-ee00169f2323/resource/6ff6a6fd-3141-4440-a880-6f60a37fe789/download/tmpcv_10m2s.csv", "data/dados_2020.csv"),
]

for url, file in urls:
    extract_data(url, file)
```
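Since these download URLs have already changed once (they end in temporary-looking names like `tmphrybkxuh.csv`), it might be more robust to ask the portal for the current URLs instead of hard-coding them. The sketch below is an assumption on my part: it assumes data.boston.gov runs CKAN and exposes the standard `package_show` action at `/api/3/action/package_show`. The dataset id is taken from the URLs above, while the helper names `fetch_package` and `csv_resources` are hypothetical. I haven't checked what resource names the portal returns, so the code only filters by format.

```python
import json
import urllib.request

# Assumption: data.boston.gov exposes the standard CKAN Action API at this path
CKAN_PACKAGE_SHOW = "https://data.boston.gov/api/3/action/package_show"
# Dataset id copied from the download URLs above
DATASET_ID = "8048697b-ad64-4bfc-b090-ee00169f2323"


def fetch_package(dataset_id: str) -> dict:
    """Hypothetical helper: call package_show and return the decoded JSON response."""
    request = urllib.request.Request(
        f"{CKAN_PACKAGE_SHOW}?id={dataset_id}",
        headers={"User-Agent": "Mozilla/5.0"},  # mirror the browser-like header used above
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


def csv_resources(package: dict) -> list[tuple[str, str]]:
    """Hypothetical helper: return (name, url) pairs for every CSV resource in the response."""
    if not package.get("success"):
        raise ValueError("CKAN API reported failure")
    return [
        (resource.get("name", ""), resource["url"])
        for resource in package["result"]["resources"]
        # CKAN format strings vary in case ("CSV", "csv"), so compare case-insensitively
        if resource.get("format", "").upper() == "CSV"
    ]
```

Usage would be something like `for name, url in csv_resources(fetch_package(DATASET_ID)): print(name, url)`, and then passing the URLs you need to `extract_data`. Parsing is kept separate from the network call so the filtering can be checked without hitting the server.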